Quick PySpark environment setup

Spin up a PySpark environment using Docker

The best way to try PySpark is in a Jupyter notebook. The Jupyter project officially provides a number of Docker images, and jupyter/pyspark-notebook is one of them. Start it with one of the commands below:

#1 bind the Jupyter port to 8888 on the host

docker run -it -p 8888:8888 jupyter/pyspark-notebook

#2 mount a host directory into the container, so you can persist your notebooks and access data on the host (Jupyter is published on host port 10000 here)

docker run -it --rm -p 10000:8888 -v /your/data:/home/jovyan/work jupyter/pyspark-notebook

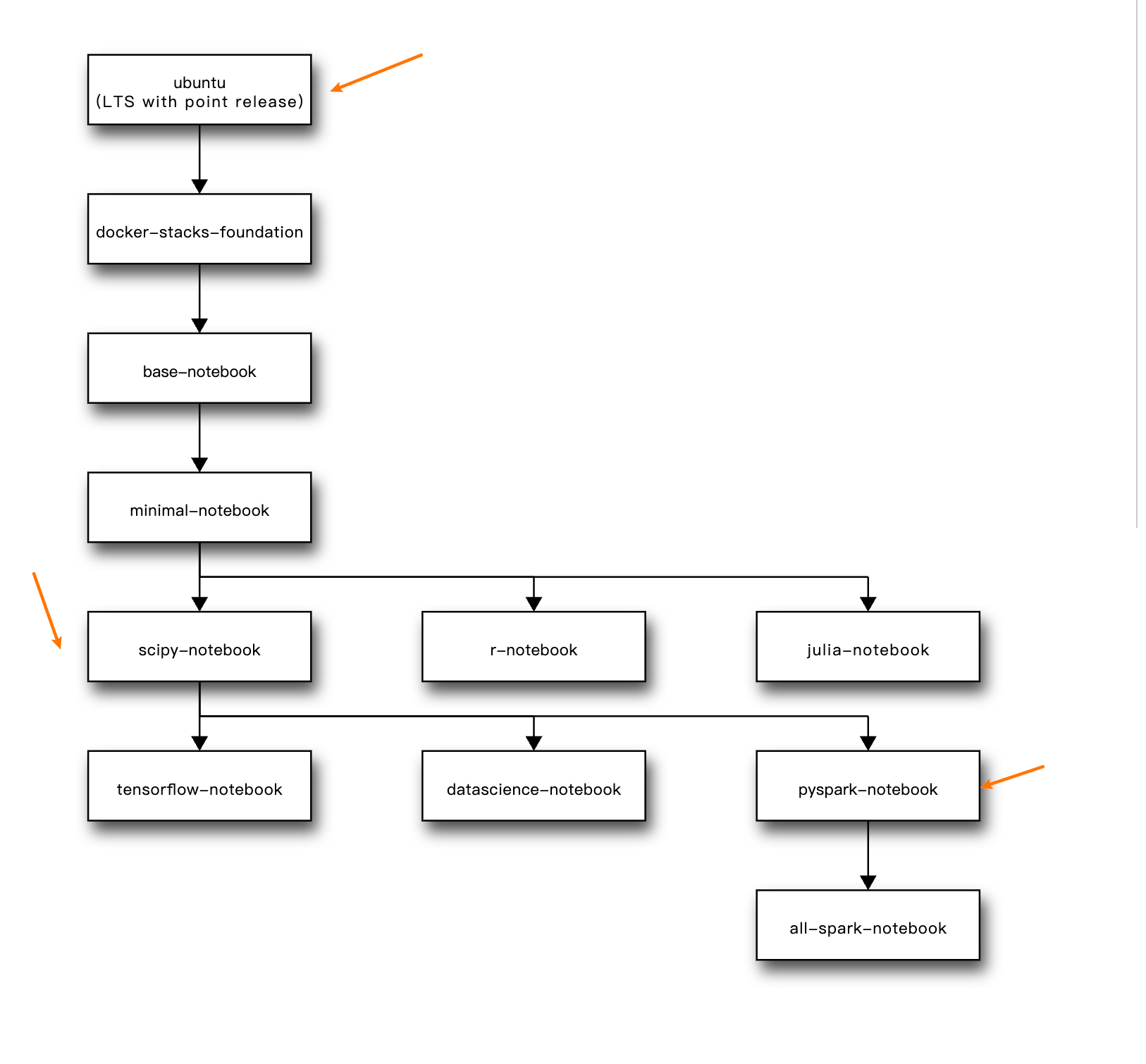
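Once the notebook is up, a quick way to confirm that Spark itself works is to run a tiny job in a notebook cell. The following is a minimal sketch, assuming the pyspark package bundled with the image and a local master; the function name `spark_smoke_test` and the app name are made up for illustration.

```python
def spark_smoke_test():
    """Start a local Spark session, count a tiny DataFrame, and stop the session."""
    # Imported inside the function so that defining it does not require pyspark.
    from pyspark.sql import SparkSession

    # "local[*]" runs Spark inside the container using all available cores.
    spark = (
        SparkSession.builder
        .master("local[*]")
        .appName("smoke-test")  # hypothetical app name
        .getOrCreate()
    )
    try:
        # Three rows with two columns, just enough to trigger a real Spark job.
        df = spark.createDataFrame(
            [(1, "a"), (2, "b"), (3, "c")],
            ["id", "label"],
        )
        return df.count()
    finally:
        spark.stop()
```

Calling `spark_smoke_test()` in a cell should return 3, the number of rows in the tiny DataFrame.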

The Jupyter images are built on top of one another, starting from an Ubuntu base image. The hierarchy graph below shows that pyspark-notebook is a child of scipy-notebook, which means many Python libraries come preinstalled. That's pretty good: we can use them right away without installing anything.
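To see which libraries are actually available in your container, a small check like the one below can be run in a notebook cell. It is only a sketch: the module list is an assumption chosen for illustration, not the image's official package list, so adjust it to the libraries you care about.

```python
import importlib.util

# Hypothetical list of modules to probe; not taken from the image documentation.
MODULES = ["pyspark", "numpy", "pandas", "scipy", "matplotlib"]


def check_modules(names):
    """Return a dict mapping each module name to True if it can be found."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


# Print one line per module without actually importing it.
for name, available in check_modules(MODULES).items():
    print(f"{name:12s} {'available' if available else 'missing'}")
```

Using `importlib.util.find_spec` only looks the module up, so the check stays fast even for heavy packages.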