Spark read csv with comma inside column

double quote the field with comma

val spark = SparkSession

.builder()

.appName("test")

.master("local[*]")

.getOrCreate()

import spark.implicits._

val csv = "a,\"b ,c\",d,"

val ds = Seq(csv).toDS

val df: DataFrame = spark.read

.csv(ds)

df.show()

df.write

.option("emptyValue", "")

.option("ignoreLeadingWhiteSpace", value = false)

.option("ignoreTrailingWhiteSpace", value = false)

.mode("overwrite")

.csv("/tmp/output")

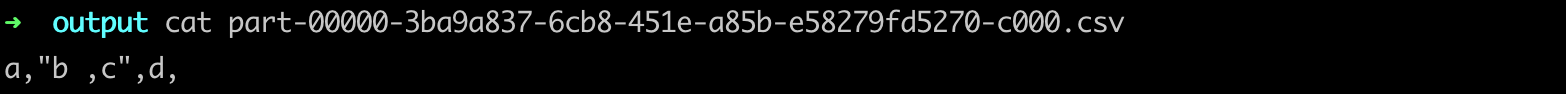

Above results show that comma inside double quotes will not be used as a separator. Also pay attention to the options writing csv, they are important to make the output consistent with the input.

Note that if there is leading space before double quote, the comma inside will be considered a separator again coz ignoreLeadingWhiteSpace is false in reading by default.